The History of the Computer

Computers are unlike some other inventions in that no single person invented them and the idea was not born at a definite moment in time. In fact, computers have been developing since the mid-seventeenth century and continue to evolve more than three centuries later. In their original form, computers were wood-and-metal calculators used for mathematics and science. By the mid-20th century, they had become giant electronic machines that occupied entire rooms. In the early 21st century, many "computers" are not even recognizable as computers: they are the invisible "brains" built into such electronic devices as cell phones, MP3 music players, and televisions.

Mechanical Calculators

Programming Pioneers

Electronics, a way of controlling machines using electricity, was invented at the beginning of the 20th century; earlier calculators and computers were entirely mechanical (made from wheels, gears, levers, and so on). Calculators and computers differ in the amount of human direction they need to operate. Whereas a calculator merely carries out actions one by one at the direction of an individual, a computer can perform a sequence of operations with little or no human intervention. The series of instructions a computer follows is called a program.

Among the first to make practical calculating machines, like the ones Babbage had envisaged, were American inventor William Seward Burroughs (1857-1898) and statistician Herman Hollerith (1860-1929). After developing a simple, mechanical calculator, Burroughs formed a company that soon became the biggest manufacturer of adding machines in the United States. The company later moved into making general office machines, such as typewriters and computers. Herman Hollerith’s work led in a similar direction. While compiling data for the U.S. Census in 1880, he realized he could make a machine that would do the job for him. He later founded the company that in 1924 was renamed International Business Machines (IBM), a pioneer in the computer industry during the 20th century.

Electronic Computers

In the 1920s, a U.S. government scientist, Vannevar Bush (1890-1974), began building complex mechanical calculators known as analog computers. These unwieldy contraptions used rotating shafts, gears, belts, and levers to store numbers and carry out complex calculations. One of them, Bush's Differential Analyzer, was effectively a gigantic abacus, the size of a large room. It was used mainly for military calculations, including those needed to aim artillery shells.

Analog computers led to digital machines, in which numbers were stored electrically in binary form, the pattern of zeros and ones invented by Leibniz. A prototype binary computer was developed in 1939 by U.S. physicist John Atanasoff (1903-1995) and electrical engineer Clifford Berry (1918-1963). The first major digital computer, one that stored numbers electrically instead of representing them with wheels and belts, was the Harvard Mark I. It was completed at Harvard University in 1944 by mathematician Howard Aiken (1900-1973) and used 3,304 relays, or telephone switches, for storing and calculating numbers. Two years later, scientists at the University of Pennsylvania built ENIAC, the world's first fully electronic computer (see box, The First Electronic Computer). Instead of relays, it used almost eighteen thousand vacuum tubes, also known as valves, which operated more quickly. According to its operating manual, "The speed of the ENIAC is at least 500 times as great as that of any other existing computing machine."

The Transistor Age

Switches, or devices that can be either "on" or "off," can be considered the brain cells of a computer: each one can represent a single binary digit, zero or one. The more switches a computer has, the more numbers it can store; the faster the switches flick on or off, the faster the computer operates. Vacuum tubes were faster switches than relays, but each one was the size and shape of an adult's thumb; eighteen thousand of them took up a huge amount of space. To work properly, vacuum tubes had to be permanently heated to a high temperature, so they consumed enormous amounts of electricity. Computers were evolving quickly during the 1940s, largely driven by military needs. Yet the size, weight, and power consumption of vacuum tubes had become limitations. Putting a computer in an airplane or a missile was impossible; a better kind of switch was needed.

Toward the end of the 1940s, this problem was solved by three scientists at Bell Telephone Laboratories (Bell Labs) in New Jersey. John Bardeen (1908-1991), Walter Brattain (1902-1987), and William Shockley (1910-1989) were trying to develop an amplifier that would boost telephone signals so they could travel much farther. The device they invented in late 1947, the transistor, turned out to have a more important use in computer switches. A single transistor was about as big as a pea and used virtually no electricity, so computers made from thousands of transistors were smaller and more efficient than those made from vacuum tubes.
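The point made above, that each switch holds one binary digit and that more switches allow more numbers to be stored, can be illustrated with a short Python sketch. It is only an illustration: the function names `switches_to_number` and `distinct_values` are invented for this example, and real hardware does not work with Python lists.

```python
def switches_to_number(switches):
    """Read a row of on/off switches as a binary number.

    The leftmost switch is treated as the most significant digit
    (an assumption made for this illustration).
    """
    value = 0
    for is_on in switches:
        # Shift the running total one binary place, then add this digit.
        value = value * 2 + (1 if is_on else 0)
    return value


def distinct_values(switch_count):
    """Count how many different numbers a row of switches can represent."""
    return 2 ** switch_count


row = [True, False, True, True]   # on, off, on, on: binary 1011
print(switches_to_number(row))    # 11
print(distinct_values(8))         # 256
```

Each extra switch doubles the count of representable numbers, which is one reason a smaller, cheaper switch mattered so much.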

Originally, transistors had to be made one at a time and then hand-wired into complex circuits. Working independently, two American electrical engineers, Jack Kilby (1923-2005) and Robert Noyce (1927-1990), devised a better way of making transistors, called an integrated circuit, in 1958. Their idea enabled thousands of transistors, and the intricate connections between them, to be manufactured in miniaturized form on the surface of a piece of silicon, one of the chemical elements of sand.

Commercial Computers

In 1943, before transistors were invented, IBM executive Thomas Watson, Sr., had reputedly quipped: "I think there's a world market for about five computers." Within a decade, his own company proved him wrong, using vacuum tubes to manufacture its first general-purpose computer, the IBM 701, for 20 different customers. The arrival of transistors changed everything, making companies such as IBM able to develop increasingly affordable business machines from the 1950s onward.

Until that decade, most of the advances in computing had come about through improvements in hardware: the mechanical, electrical, or electronic components from which computers are made. During the 1950s, advances were also made in software: the programs that control how computers operate. Much of the credit goes to Grace Murray Hopper (1906-1992). A mathematician who divided her time between the U.S. Navy and several large computer corporations, Hopper is remembered as a pioneer of modern computer programming. In the 1950s, she invented the first compiler, a computer program that "translates" English-like commands that people understand into binary numbers that computers can process.

Even the invention of the transistor did not make computers affordable enough for most people. The world's first fully transistorized computer, the PDP-1 made by Digital Equipment Corporation, still cost approximately $120,000 when it was launched in 1960. Toward the end of the 1960s, however, another breakthrough was made in electronics; it changed everything. Robert Noyce, coinventor of the integrated circuit, and Gordon Moore (1929-) formed a company called Intel in 1968. The following year, one of Intel's engineers, Marcian Edward (Ted) Hoff (1937-), developed a way of making a powerful kind of integrated circuit that contained all the essential components of a computer. It was the microprocessor, popularly known as a microchip or silicon chip.

Microchips were no bigger than a fingernail and, during the early 1970s, found their way into various electronic devices, including digital watches and pocket calculators. In the mid-1970s, electronics enthusiasts started using microprocessors to build their own home computers. In 1976, two California hobbyists, Steve Jobs (1955-) and Steve Wozniak (1950-), used this approach to develop the world's first easy-to-use personal "microcomputers": the Apple I and Apple II.

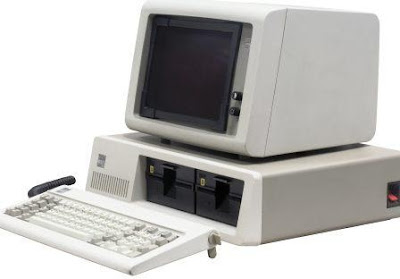

Many other companies launched microcomputers, all of them with incompatible hardware and software. Apple's machines were so successful that, by 1981, IBM was forced to launch its own personal computer (PC), using software developed by the young, largely unknown Bill Gates (1955-) and his then tiny company, Microsoft. During the 1980s, most personal computers were standardized around IBM's design and Microsoft's software. This was extremely important for businesses in particular, as employees needed to be able to share information easily.

User-Friendly Computers

Users needed not just compatibility but also ease of operation. Most of the early computer programmers had been highly qualified mathematicians; ordinary people were less interested in how computers worked than in what they could actually do. One inventor who recognized this immediately was American computer scientist Douglas Engelbart (1925-). During the 1960s, he pioneered a series of inventions that made computers more "user-friendly," the best known of which is the computer mouse.

Engelbart’s ideas were taken up in the early 1970s at the Palo Alto Research Center (PARC), a laboratory in California that was then a division of the Xerox Corporation. Xerox had made its name and fortune in the 1960s, manufacturing photocopiers invented by Chester Carlson (1906-1968) in 1938. Executives at Xerox believed the arrival of computers heralded a new "paperless" era in which photocopiers and the like might become obsolete. Consequently, Xerox began developing revolutionary, easy-to-use office computers to ensure that it could remain in business long into the future.

After visiting Xerox PARC, Apple's head, Steve Jobs, began a project to develop his own easy-to-use computer, initially called PITS (Person In The Street). This eventually evolved into Apple's popular Macintosh (Mac) computer, launched in 1984. When Microsoft incorporated similar ideas into its own Windows software, Apple was unable to stop the Redmond, Washington, company despite a lengthy court battle. The ultimate victors were computer users, who have seen an enormous improvement in the usability of computers since the mid-1980s.

Modern Computers

From the abacus to the PC, computers had been largely self-contained machines. That began to change in the 1980s, when the arrival of standardized PCs made connecting computers into networks easier. Businesses, schools, universities, and home users found they could use networked computers to share information more easily than ever before. More and more people connected their computers, mainly using the public telephone system, to form what is now a gigantic worldwide network of computers called the Internet.

During the late 1980s, British computer scientist Tim Berners-Lee (1955-) pioneered an easy way of sharing information over the Internet that he named the World Wide Web. Since then, the Web has proved to be one of the most important communication technologies ever invented. Apart from information sharing, it has helped people create new businesses, such as the popular auction Web site eBay, founded by American entrepreneur Pierre Omidyar (1967-) in 1995. In addition to offering new business opportunities, the Internet is also helping computing to evolve. One notable example of this evolution is the Linux operating system, originally created by Finnish computer programmer Linus Torvalds (1969-) in 1991. This software was developed by thousands of volunteers working together over the Internet.

Collaboration via the Internet is one of the most important aspects of modern computing. Another is convergence: a gradual coming together of computers and other communication technologies. Telephones, cameras, televisions, computers, stereos, and sound recording equipment were once entirely separate. Now, all these technologies can be incorporated into a single pocket-size device such as a cell phone. Collaboration and convergence indicate that computer technology is continually evolving.

Source: Inventors and Inventions (Fuller, R. Buckminster)
